A while back I started working on a hobby project that tackled one specific problem at work: ingestion and retrieval of data. While this task has been tackled numerous times before, this particular case combined two requirements that made it slightly complicated and thus challenging:

- The data would be a mix of structured and unstructured content in no fixed ratio. Think of a form with check boxes where a major portion is still free-text input.

- Said data had to be available as soon as possible, i.e. near real-time processing or even real-time streaming.

Design Considerations

While such pipelines are easy and fast to assemble, even with open-source and license-free applications, I wanted to cram all of that into very low-resource systems, on the order of a Raspberry Pi (3, not 5 😜). So, isolating the requirements, I wanted to make something that is highly optimised for:

- RAM Usage as low as possible (as few microservices and internal daemons as possible)

- Lightweight Docker images

- Threading and background workers (async and bulk submissions)

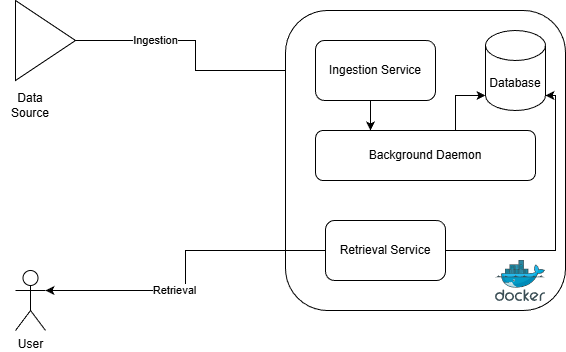

Architecture

While there are unlimited possibilities, I decided to keep the process fairly simple.

- An ingestion service takes in data, runs pre-ingestion checks (validity, metadata) and prepares the data for ingestion.

- A background service ingests the data into the appropriate backend. Indices are created by the backend itself.

- During data retrieval, the retrieval service fetches data directly from the backend.
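The three steps above can be sketched roughly as follows. This is a minimal illustration using only the standard library, not the project's actual code: `Record`, `IngestionPipeline`, `write_batch`, the batch size and the flush interval are all hypothetical names and values, and the backend is replaced by a plain callable.

```python
import queue
import threading
import time
from dataclasses import dataclass, field


@dataclass
class Record:
    # Hypothetical record shape: a deployment name plus an arbitrary payload.
    deployment: str
    payload: dict
    received_at: float = field(default_factory=time.time)


class IngestionPipeline:
    """Validates records up front, then hands them to a background writer."""

    def __init__(self, write_batch, batch_size=10, flush_interval=0.05):
        self._queue = queue.Queue()
        self._write_batch = write_batch  # callable standing in for the backend
        self._batch_size = batch_size
        self._flush_interval = flush_interval
        self._stop = threading.Event()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, deployment, payload):
        # Pre-ingestion checks: reject obviously invalid input early.
        if not deployment or not isinstance(payload, dict):
            raise ValueError("invalid record")
        self._queue.put(Record(deployment, payload))

    def _run(self):
        batch = []
        while not (self._stop.is_set() and self._queue.empty()):
            try:
                batch.append(self._queue.get(timeout=self._flush_interval))
            except queue.Empty:
                pass  # nothing new arrived: flush whatever has accumulated
            else:
                if len(batch) < self._batch_size:
                    continue  # keep collecting until the batch is full
            if batch:
                self._write_batch(batch)
                batch = []
        if batch:
            # Flush the remainder once shutdown was requested.
            self._write_batch(batch)

    def close(self):
        self._stop.set()
        self._worker.join()
```

The caller of `submit` only pays for validation and a queue put; the write to the backend happens in bulk on the worker thread, which is one way to keep request latency independent of backend latency.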

Design Principle

During implementation, adhering to a multitier architecture seemed inevitable, as such an application needs to be able to scale individual components, i.e. layers, in order to scale as a whole.

- Presentation Layer: The frontend was written in pure HTML, CSS and JS. I wanted to keep the work minimal without reinventing the wheel, so I opted for plotly.js.

- Application Layer: As discussed above, there are multiple services and controllers which can be swapped in place and updated without affecting the function of other components.

- Business Logic Layer: This was probably the easiest component to implement, as there were very few constraints regarding data quality and pre-ingestion checks. The backend does account for them, in case such checks are required in future.

- Data Access Layer: The DAL was probably the most time-consuming part to program. Data transfer and mapping is implemented in a pure Pythonic way with the ORM `sqlalchemy` and `pydantic`, with most care taken for events where data conversion is done for custom metrics.

- Database Layer: The database was good ol' Postgres. Obviously it is swappable anytime via the ORM bindings, but who would do that 😜 Data migration and versioning is done with `alembic` to ensure the database stays in sync with code changes.

Security

The whole DB, including logs, is encrypted using Fernet (see the Fernet documentation) to keep the logs confidential. The downside is that this increases the system resources needed to encrypt data during ingestion and decrypt it during fetch. Transport security is handled with TLS 1.3, so transfer from client to server takes place over HTTPS, while Fernet provides encryption at rest.
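As a rough illustration of the encrypt-on-ingest, decrypt-on-fetch cost mentioned here, a Fernet round trip with the `cryptography` package looks like this. The helper names `encrypt_log` and `decrypt_log` are hypothetical, and generating the key in-process is an assumption made only to keep the sketch self-contained.

```python
from cryptography.fernet import Fernet, InvalidToken

# Assumption: a real deployment would load the key from a secret store
# instead of generating a fresh one on every start.
key = Fernet.generate_key()
fernet = Fernet(key)


def encrypt_log(line: str) -> bytes:
    # Called on the ingestion path before the value is written to the DB.
    return fernet.encrypt(line.encode("utf-8"))


def decrypt_log(token: bytes) -> str:
    # Called on the retrieval path; raises InvalidToken if the token was
    # tampered with or encrypted under a different key.
    return fernet.decrypt(token).decode("utf-8")
```

Every record passes through one of these calls in each direction, which is where the extra CPU load during ingestion and retrieval comes from.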

For authentication, token-based authentication is used, with tokens having a predefined time to live (TTL) that can be configured via the admin control panel. OAuth2 with OpenID Connect can be used if federated access control already exists.
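The TTL idea can be sketched with an in-memory store. This is only an illustration under stated assumptions: `TokenStore`, `issue` and `verify` are hypothetical names, and a real service would persist tokens rather than keep them in a dict.

```python
import secrets
import time


class TokenStore:
    """Issues opaque tokens that stop being valid after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds  # would come from the admin panel setting
        self._clock = clock      # injectable so expiry can be tested
        self._tokens = {}        # token -> (user, expires_at)

    def issue(self, user):
        token = secrets.token_urlsafe(32)
        self._tokens[token] = (user, self._clock() + self._ttl)
        return token

    def verify(self, token):
        # Returns the user name for a live token, otherwise None.
        entry = self._tokens.get(token)
        if entry is None:
            return None
        user, expires_at = entry
        if self._clock() >= expires_at:
            del self._tokens[token]  # drop expired tokens lazily
            return None
        return user
```

Storing the absolute expiry time at issue means a later change of the TTL setting only affects newly issued tokens.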

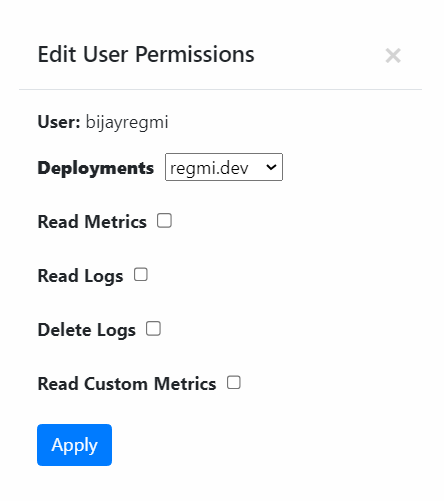

Role-based access control (RBAC) ensures every user only has access to the metrics/logs they need to see. The following controls are possible:

- Deployment level: A user can be granted access to a particular deployment only.

- Metrics: A user can be assigned read permissions on a deployment's metrics.

- Logs: A user can be assigned read or delete permissions on a deployment's logs.

- Custom Metrics: Custom metrics are the basis for extending the app via plugins. A user can be assigned access to read custom metrics for a certain deployment.
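The controls listed above boil down to a per-deployment permission lookup. A minimal sketch, in which `Permission`, `User` and `is_allowed` are hypothetical names and the permission strings are made up for illustration:

```python
from dataclasses import dataclass, field
from enum import Enum


class Permission(Enum):
    READ_METRICS = "metrics:read"
    READ_LOGS = "logs:read"
    DELETE_LOGS = "logs:delete"
    READ_CUSTOM_METRICS = "custom_metrics:read"


@dataclass
class User:
    name: str
    # deployment name -> set of permissions granted on that deployment
    grants: dict = field(default_factory=dict)


def is_allowed(user, deployment, permission):
    # No entry for the deployment means no access at all (deployment level).
    return permission in user.grants.get(deployment, set())


alice = User("alice", {"prod": {Permission.READ_METRICS, Permission.READ_LOGS}})
```

With this shape, `is_allowed(alice, "prod", Permission.READ_LOGS)` is true, while deleting logs on `prod` or reading anything on another deployment is denied by default.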

Performance Benchmarks

While all of this is nothing new, I was very curious to see how this architecture scales with regard to resource pressure vs. HTTP requests (ingestion/retrieval) per second.

Ingestion/Retrieval Performance

Tests were done using ali v0.7.5 (ali Repo) to squeeze the most out of the system, with the following configuration:

- NGINX as reverse proxy with 768 worker connections

- System had 8GB RAM

- Duration of 10 minutes

- POST payload of 10 records for bulk insertion and 1 record for single insertion

- 768 workers on the client side (a 56-core Intel server as client, to eliminate as many client-side issues as possible)

- Retrieval done with metrics: CPU, GPU, RAM, Storage, Network IO (25 each per request)

Results

| Type | Req/s | Latency | RAM | CPU | NetIO/In | NetIO/Out | Throughput | Comment |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Ingestion | 820 | 200 ms | 8 GB | 100% | 12% | 12% | 820 recs | |
| Ingestion Bulk | 413 | 200 ms | 8 GB | 100% | 15% | 15% | 2065 recs | bigger payload possible here |
| Retrieval | 1300 | 800 ms | 8 GB | 100% | 12% | 46% | 195k recs | req * 25 * 6 |

As we can see from the benchmarks, the biggest bottleneck is the per-worker handling of ingestion and retrieval rather than the DB backend itself.

Scaling

I have been using this project at scale in production to generate views on time-series data, handling around 1k ingestions per second with well-timed bulk insertions and aggregations, at around 2GB of memory pressure for most of the request lifecycle. This can be tuned further with DB optimisations and maybe HTTP/2 connection multiplexing (connections are reused, so the TCP connection dance at the beginning of every request is spared), but I am very positively surprised by the results given only very high-level tuning of these parameters.

So far, 180GB of metrics and logs have been ingested over the last 15 days, and it seems to be running without problems.

Summary

This project started out as a hobby project. I plan on open sourcing it one day, once it matures in production and the last few issues are resolved. If you want to stay up to date, follow my GitHub profile regmibijay.

Thanks for taking the time to read through all this!

B. Regmi (bijay@regmi.dev)

Log And Metrics Retrieval at Scale