Precap

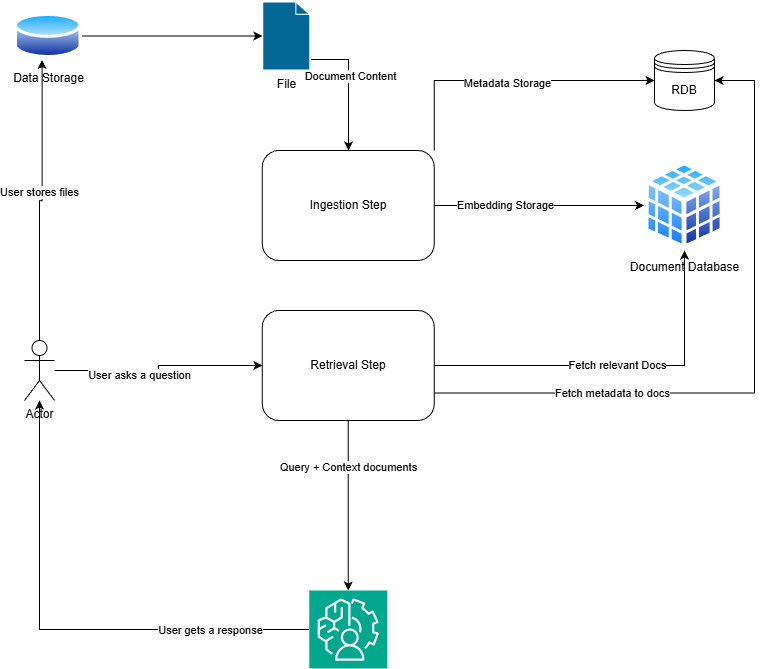

Retrieval-Augmented Generation (RAG) is currently one of the most hyped technologies in Natural Language Processing (NLP). The concept is simple: you index a body of data, and every time a user wants to know something from it, you run similarity algorithms to find the portions of the data that might answer the question and ask an LLM to generate an answer from them. However, the number of adjustments and tweaks the system needs is vast, and it requires a deep understanding of many fields: data parsing, algorithms that retrieve the data relevant to a query (i.e. similarity algorithms) and, of course, generative AI that can produce answers quickly and precisely.

In my opinion, the first step of the process, ingestion, is one of the most challenging, as in production you will often be left with feature requests to support a broad spectrum of data formats (PDF, DOCX, XLSX etc.) and connections to data sources (Web, S3, Azure Blob Storage etc.). At first glance it is fairly easy and straightforward to get a system going and reach the first half of a good product. You might get your system to Technology Readiness Level (TRL) 4-5, secure good funding and make your stakeholders very happy. Then the task gets complicated: the first batch of very old but crucial documents starts causing trouble during ingestion, and improper parsing causes unforeseen issues with your retrieval and reranking algorithms.
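To make the index → retrieve → generate loop concrete, here is a toy sketch. It uses bag-of-words cosine similarity as a stand-in for embeddings, and `build_prompt` is a hypothetical helper; a real system would use an embedding model, a vector store and an actual LLM call.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/digits as tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(chunks: list[str]) -> list[tuple[str, Counter]]:
    # "Indexing": precompute one term-frequency vector per chunk.
    return [(c, Counter(tokenize(c))) for c in chunks]


def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 3) -> list[str]:
    # Rank chunks by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [c for c, _ in ranked[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    # Hypothetical template; the returned string would be sent to an LLM.
    sources = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n{sources}\n\nQuestion: {query}"
```

Swapping the `Counter` vectors for embeddings and the list for a vector database gives the usual production shape; the control flow stays the same.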

Ingestion

A common mistake I see, one that turns into a blunder in later stages, is pretending that all data sources are built the same and that the top priority is extracting text from the document. This might be a good approach when your documents contain only paragraphs of text, but most real-world documents do not. They contain images that are relevant to the context, tables, and headers and footers that say a lot about the content itself. While extracting all of this context is not always possible and is very tricky, there is a lot of help available from the open-source community:

- pymupdf4llm (Documentation) has been my go-to PDF parser for low-effort parsing.

- OmniParse (GitHub) is nice to have for getting a document parsed with little setup, but it is highly compute-intensive.
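One way to avoid treating every source the same is to route each file to a format-specific parser. The sketch below assumes a hypothetical registry keyed by file extension; the PDF entry calls `pymupdf4llm.to_markdown`, which returns the document content as Markdown, and imports it lazily so the rest of the pipeline runs without it installed.

```python
from pathlib import Path
from typing import Callable

Parser = Callable[[Path], str]


def parse_pdf(path: Path) -> str:
    # Imported lazily: pymupdf4llm is only needed when a PDF actually shows up.
    import pymupdf4llm
    return pymupdf4llm.to_markdown(str(path))


def parse_text(path: Path) -> str:
    # Plain text: replace undecodable bytes instead of failing the whole file.
    return path.read_text(encoding="utf-8", errors="replace")


# Hypothetical registry; extend with DOCX/XLSX/... parsers as needed.
PARSERS: dict[str, Parser] = {
    ".pdf": parse_pdf,
    ".txt": parse_text,
    ".md": parse_text,
}


def parse_document(path: Path) -> str:
    parser = PARSERS.get(path.suffix.lower())
    if parser is None:
        raise ValueError(f"no parser registered for {path.suffix!r}")
    return parser(path)
```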

The current bottleneck in transforming a document into complete context, i.e. turning the complete page content into an LLM-ready format, stems from the variety of sources not sharing a uniform standard. Even within well-established formats like PDF there are a variety of standards and versions that cannot be treated the same. Also, most of the time your huge dataset will be stored in a source that makes it hard to fetch data from. It can be bandwidth-limited (e.g. OneDrive) or simply hard to deal with properly (e.g. Samba).

Fetching Documents

While working with numerous data sources spanning a broad combination of on-premise and cloud warehouses, I often faced the same problem: efficiently gathering and processing the content. Different sources transfer files at different speeds, often do not indicate the bandwidth properly, and generally just abort the connection mid-transmission. Then there are services that expose useful metadata such as last modification time and file size, which makes it very easy to filter out files that are too big or that have not changed since they were last ingested, saving you all the hassle.
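When a source does expose size and modification time, filtering before downloading is cheap. A minimal sketch, assuming a hypothetical `RemoteFile` record filled from the source's listing API and a dict of last-ingested timestamps kept by the ingestion job; the 50 MiB limit is an arbitrary example value.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class RemoteFile:
    # Hypothetical listing record; fill it from the source's metadata API.
    path: str
    size_bytes: int
    last_modified: datetime


def files_to_ingest(
    listing: list[RemoteFile],
    ingested_at: dict[str, datetime],
    max_size_bytes: int = 50 * 1024 * 1024,
) -> list[RemoteFile]:
    """Keep files that are small enough and changed since their last ingestion."""
    selected = []
    for f in listing:
        if f.size_bytes > max_size_bytes:
            continue  # too big for this pipeline; skip or route elsewhere
        previous = ingested_at.get(f.path)
        if previous is not None and f.last_modified <= previous:
            continue  # unchanged since it was last ingested
        selected.append(f)
    return selected
```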

Retry Mechanism

If fetching document content fails, it is generally a good idea to add retry mechanisms for certain cases, as restarting the same preparation dance for ingesting the same files (even at a later time) is time- and compute-intensive. With well-established services that provide RESTful APIs, people time and again seem to ignore RFC 6585, which introduced the HTTP 429 (Too Many Requests) status code: the server uses it to indicate that the client is sending too many requests, and it may include a Retry-After header that the client should take into account before reissuing the request. Honouring it will immensely reduce hassle, as you simply wait as long as the server asks before downloading files again. Similar mechanisms exist in other protocols, such as the credits SMB uses to keep clients from flooding the server with requests.

Handling Transmission Errors

Even if rate limiting is taken care of, you might run into sporadic issues where transmission is aborted or fails temporarily. In such cases, it is highly advisable to account for transmission errors. There are often file validity checks that help you assert that a download indeed completed without errors, but personally I find them rarely worth the compute: once you account for network-level transmission failures, they catch very few additional problems.

Data Corruption

Most of the time, especially when dealing with data warehouses housing legacy and historical data, you are very likely to stumble upon old formats. These files are often very hard to deal with and might contain some important data, but if that data was so important, it was likely migrated to newer formats. In my experience, most documents stored in old formats are annoying to deal with and do not provide much value. The number of times my indexing workers have got stuck on some corrupted XLS file in production is not negligible. I think the best practice here is to get everything you can with low effort and skip the files causing trouble.

Processing Data

Processing data after it has been downloaded mostly involves steps like chunking, embedding and storing in a database. Depending on the file type and format, some approaches are much more suitable than others.

Preprocessing

Given that the file was transferred successfully and can be read, you might want to ensure the data is of good quality and even preprocess it. Strategies like pre-sorting data based on quality assessment, relevance, permissions etc. can often lead to faster ingestion times. Lemmatizing text (reducing words to their base forms) shrinks the vocabulary while preserving the gist, making the content much easier to handle.
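As an illustration of quality-based pre-sorting, the sketch below scores extracted text with two crude, hypothetical heuristics (the share of alphabetic characters, which drops for garbled OCR output or binary debris, and a minimum length) and orders documents so the most promising ones are ingested first. The thresholds are arbitrary assumptions, not recommendations.

```python
def quality_score(text: str, min_chars: int = 200) -> float:
    """Crude 0..1 quality estimate for extracted text (a heuristic, not a standard)."""
    stripped = text.strip()
    if len(stripped) < min_chars:
        return 0.0  # too little text to be worth indexing
    non_space = [c for c in stripped if not c.isspace()]
    if not non_space:
        return 0.0
    alpha_ratio = sum(c.isalpha() for c in non_space) / len(non_space)
    return round(alpha_ratio, 3)


def presort(docs: dict[str, str], threshold: float = 0.6) -> list[str]:
    """Return ids of documents scoring at least `threshold`, best first."""
    scored = {doc_id: quality_score(text) for doc_id, text in docs.items()}
    keep = [doc_id for doc_id, s in scored.items() if s >= threshold]
    return sorted(keep, key=lambda doc_id: scored[doc_id], reverse=True)
```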

Data Format

The best approach when ingesting data is to account for the content and adapt chunking and blurbing strategies accordingly. For example, when chunking paragraphs, semantic chunking is essential if you want to preserve context, whereas for tables semantic chunking will probably not make much sense. The same obviously carries over to spreadsheets. Apply proper mechanisms based on file type: with libraries like pymupdf you can even detect tables inside PDFs with great consistency and chunk those parts so that the table context stays preserved.
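A simplified, structure-aware chunker over Markdown (the kind of output pymupdf4llm produces) might look like the sketch below: it keeps each table block whole and packs paragraphs up to a character budget. Note that this is plain paragraph packing, not semantic chunking, which would typically compare embeddings of adjacent sentences to find topic boundaries; the budget and the "table lines start with `|`" rule are assumptions.

```python
def split_blocks(markdown: str) -> list[tuple[str, str]]:
    """Split Markdown into ("table", text) and ("text", paragraph) blocks."""
    blocks: list[tuple[str, str]] = []
    table: list[str] = []
    para: list[str] = []

    def flush_para() -> None:
        if para:
            blocks.append(("text", " ".join(para)))
            para.clear()

    def flush_table() -> None:
        if table:
            blocks.append(("table", "\n".join(table)))
            table.clear()

    for line in markdown.splitlines():
        stripped = line.strip()
        if stripped.startswith("|"):  # Markdown table row
            flush_para()
            table.append(stripped)
        elif not stripped:  # blank line ends the current block
            flush_para()
            flush_table()
        else:
            flush_table()
            para.append(stripped)
    flush_para()
    flush_table()
    return blocks


def chunk(markdown: str, max_chars: int = 1000) -> list[str]:
    """Tables become their own chunk; paragraphs are packed up to max_chars.

    A single paragraph longer than max_chars is kept as one oversized chunk.
    """
    chunks: list[str] = []
    current = ""
    for kind, text in split_blocks(markdown):
        if kind == "table":
            if current:
                chunks.append(current)
                current = ""
            chunks.append(text)  # never split a table, even if it is large
        elif current and len(current) + 2 + len(text) > max_chars:
            chunks.append(current)
            current = text
        else:
            current = f"{current}\n\n{text}" if current else text
    if current:
        chunks.append(current)
    return chunks
```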

Metadata

Usually the text content of a file is not the only data you are interested in about a document. You might want data like last modified date, access rulesets, content type etc. to further improve the user experience and security of the system. This is especially crucial if you want to implement features like Role-Based Access Control (RBAC) that protect file content. It is best practice not to maintain such access tables yourself but to adhere to the system at the origin of the file content (i.e. OneDrive, S3 etc.), which has much more robust security; this also reduces synchronisation times when those tables change.
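One way to honour the source's access rules is to copy its permissions onto each chunk at ingestion time and filter at query time. The sketch assumes a hypothetical `Chunk` record whose `allowed_principals` was populated from the origin system's permissions; it does not call any real permissions API. In a vector store you would typically push this filter into the query itself so restricted chunks are never retrieved.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Chunk:
    text: str
    source_path: str
    # Users/groups allowed to read the source file, copied from the origin system.
    allowed_principals: frozenset[str] = field(default_factory=frozenset)


def visible_to(chunks: list[Chunk], user: str, groups: set[str]) -> list[Chunk]:
    """Drop chunks the user may not read; apply before ranking and prompting."""
    principals = {user} | groups
    return [c for c in chunks if c.allowed_principals & principals]
```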

Summary

When ingesting data at scale, you need to reconsider even small time savings, because they scale up with volume. Retry mechanisms reduce setup overhead and significantly improve ingestion times. Adapting data processing techniques to the content leads to better ingestion quality and better answers at query time. Metadata helps control different aspects of the retrieval process and helps personalise each user's experience.

While this topic is vast and I might not have covered all aspects, if you would like to add something or have questions, feel free to contact me!

Thanks for taking the time to read through all this!

B. Regmi (bijay@regmi.dev)

Processing Unstructured Data at Scale for RAG