This spring, during a corporate meeting, I was asked to find a way to make a huge amount of scattered data efficiently searchable and interactive, because it was costing hours of human effort just to find the right data, i.e. files, emails, notes etc. The obvious solution to this problem was to implement some variety of retrieval-augmented generation (RAG). At this point we knew what to implement but not necessarily how, given that the proposals called for making it available to thousands of users with half a petabyte of unstructured data indexed.

Pain Points

Apart from obvious concerns like data protection and role-based access control (RBAC), several other factors made it challenging to run an application of this caliber in production.

Interface

Most of our users had only seen a handful of AI applications and were not familiar with the GenAI products out there or the challenges that come with them, so we had to build a sort of "iPhone product": a product that you turn on and that just works, so intuitive that anyone can use it without a lengthy introduction. Something so simple that even people who did not know how LLMs work could hop on and get stuff done.

Accuracy

Looking good is only half of the battle. A product like this is dead on arrival if it fails at its main task: making search and interaction with files as accurate as possible. After all, the main goal is to waste as little human time as possible in finding the right data. We were therefore after state-of-the-art technology with a good balance between speed and accuracy.

Costs

Both a shiny interface and high accuracy are very achievable with an unlimited budget, which we unfortunately did not have. Finding something with good cost/performance value is often hard, especially when you are looking for AI products that are well built and scalable.

Integration

Such an application would need to integrate seamlessly with data stores across Microsoft products, warehouses and custom-built storage solutions. We needed something that would make connecting data to the application as painless as possible while being fast at doing so.

Onyx (formerly Danswer)

After trying out multiple open source RAG applications, I fell in love with Onyx instantly. After playing around with it locally for a bit, I set up a meeting with the stakeholders, and all I said was "I have something you have been looking for. Let the product speak for itself." To cut this dramatic and emotional script short, they obviously loved it 😂

We developed a fork of Onyx for around 8 months, creating our own version with the services we required.

Written in Python (backend) and TypeScript (frontend), Onyx has an intuitive design that can mostly be tuned the way you want with minimal effort, and its interface is a very good starting point for customization, which was exactly what we were after. It is MIT-licensed (except for some EE parts) and has a nice and welcoming community, reachable via Slack.

Deployments can be done either via the provided Docker Compose files, as a stack of microservices, or with charts on Kubernetes.

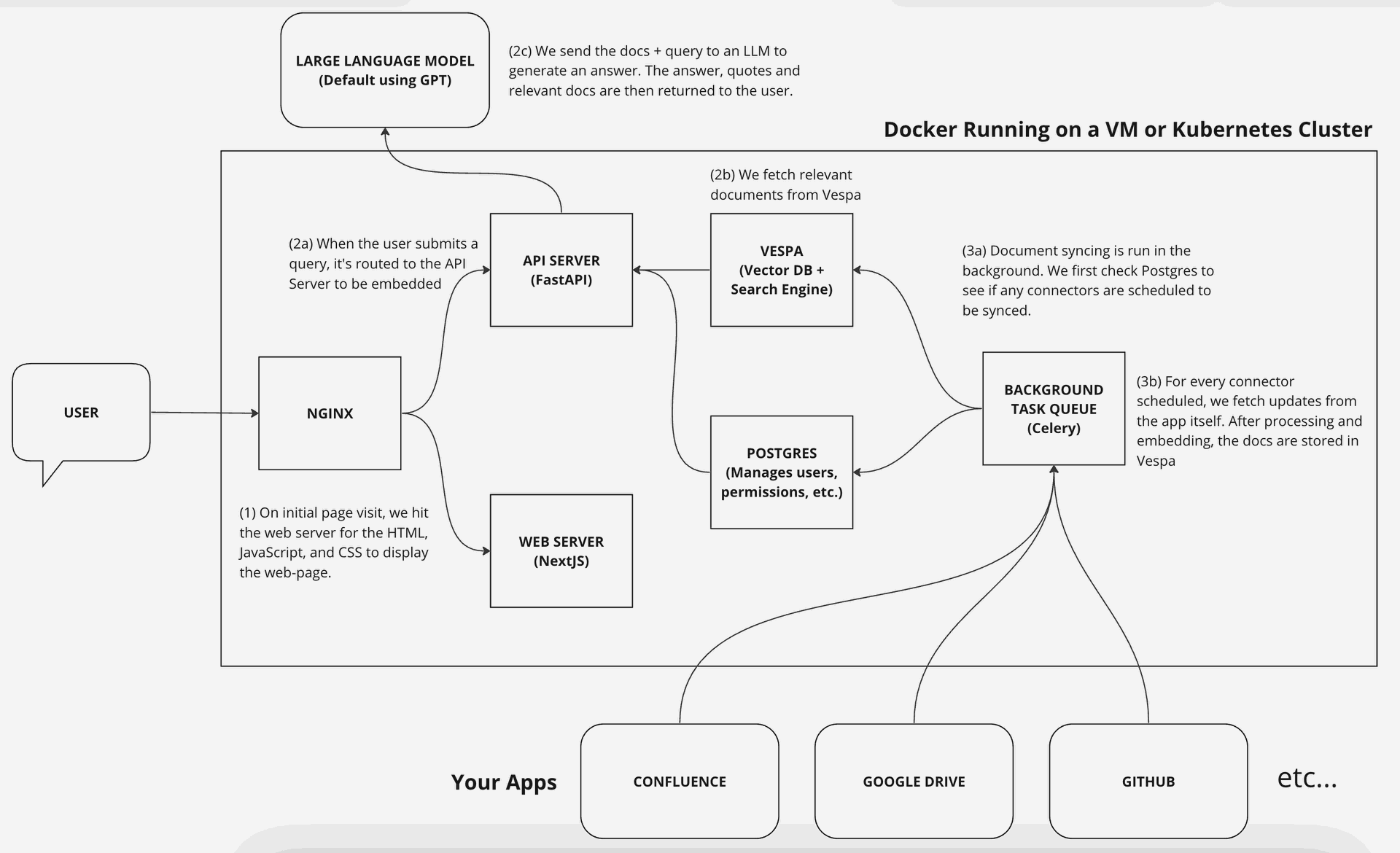

System Architecture

Image from Onyx Documentation

Frontend

The frontend is written in TypeScript and decoupled from the backend, so it can be run separately as a microservice. It needs very few resources to run.

An Nginx container takes user requests, routes them based on the requested resource, and passes them on to the Next.js server.

Backend

Most of the backend services are written in Python; the exceptions are PostgreSQL and Vespa. Each of these services runs in its own container, and the following sections discuss scaling for each of them individually.

API Service

The API server is the core of the application: it orchestrates the connections between the user, the relational database, the document database, and the indexing and embedding operations. It contains the main logic for the AI magic and keeps the services in sync.

Since this service largely determines how fast and responsive the stack feels to the user, making sure it has the resources it needs to run well is of the utmost priority. In contrast to the current implementation of Onyx, our version had the embedding functions running inside the API service. Upstream this is no longer the case: embedding has been moved to the model server, and with the current split into inference and indexing model services, you have more options to scale them individually.

Relational Database

Onyx uses PostgreSQL, as mentioned above. Tables and indices are created using the ORM SQLAlchemy and the data migration tool Alembic.
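To illustrate why those indices matter, here is a minimal sketch. It assumes Python's built-in `sqlite3` as a stand-in for PostgreSQL so that it runs without a database server, and the `document` table, its columns and the index name are hypothetical, not Onyx's actual schema. In Onyx itself such an index would be declared through SQLAlchemy and shipped with an Alembic migration.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return the planner's description of how it would execute `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each row holds the human-readable plan detail.
    return " | ".join(row[3] for row in rows)


conn = sqlite3.connect(":memory:")
# Hypothetical table: one row per indexed document, tied to a connector.
conn.execute(
    "CREATE TABLE document ("
    "id INTEGER PRIMARY KEY, connector_id INTEGER, title TEXT)"
)

lookup = "SELECT id, title FROM document WHERE connector_id = ?"

# Without an index, the planner has to scan the whole table.
plan_before = query_plan(conn, lookup, (1,))

# After adding an index on the filtered column, it can search the index.
conn.execute("CREATE INDEX ix_document_connector_id ON document (connector_id)")
plan_after = query_plan(conn, lookup, (1,))

print("before:", plan_before)
print("after: ", plan_after)
```

On PostgreSQL, the equivalent check would be running `EXPLAIN` on the queries your customizations introduce.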

Document Index

The document database of choice is Vespa. Vespa is a highly scalable database that was originally developed at Yahoo and has since been moving towards becoming independent.

Background Service

The background service is probably the most compute-intensive of all the microservices. It is responsible for indexing data and removing obsolete data.

Scaling Infrastructure

The Onyx documentation has suggestions for the hardware infrastructure required to get started. These are good for use cases where you expect up to 100 users and most connectors are set up centrally for all users. In our use case, each user had their own connectors for email, calendars, files and such, and there were also many cases where a group or department needed additional connectors for its specific needs.

Hardware

When deciding on infrastructure upgrades, the following points are relevant:

- Single VM or Distributed: Hosting your stack on a single VM is not bad practice per se. If you expect to index files once a day for all users and expect a moderate amount of search/chat requests, this will be highly cost-efficient. With Docker volumes, data can be migrated from this setup to a distributed one without loss, should you need to scale up the infrastructure in the future. I therefore highly recommend starting with a single instance and moving microservices out as your needs grow.

- Performance of Microservice: Since the microservices do different jobs, the hardware they need differs as well. For services that work with multiple workers, like Nginx and PostgreSQL, more CPU cores translate into much better performance, while services like the model server benefit heavily from a GPU. And then there is Vespa. Vespa stores tensors primarily in RAM, so the more data you ingest, the more RAM you will need. With Onyx's default schema definitions, we needed 128 GB of RAM per TB of data across the Vespa clusters. Additionally, a faster disk enables faster loading should a node need to be restarted, which shortens downtimes.

- Autoscaling in Public Cloud: Public cloud services such as managed relational databases can have a distinct advantage: they are easy to get started with and offer options like autoscaling. If you want less maintenance work and have the budget, they may be all you need. We evaluated Azure's managed PostgreSQL but were not happy with the added latency and the limited maximum number of connections, so we decided to scale the relational database in our local cluster. Autoscaling is of course available for VMs too. We did not try it, as we anticipated unwanted interactions in how and when the autoscaling would kick in and wanted to have complete control over such processes.

- Costs: Infrastructure upgrades are expensive, so when doing cost assessments it is highly recommended to check whether programs like the Azure savings plan apply to you. If you are setting up Onyx for special clients in industries like healthcare, you might even be able to get some exclusive sponsorships and funding.
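The RAM-per-data ratio mentioned above lends itself to a quick back-of-the-envelope estimate. The sketch below assumes the 128 GB per TB ratio we observed with Onyx's default schema; your ratio will differ with other schemas and embedding sizes, and the function name is made up for illustration.

```python
# Ratio observed in our deployment with Onyx's default schema definitions.
# Treat it as a starting point, not a guarantee for other setups.
GB_RAM_PER_TB_OF_DATA = 128


def estimate_vespa_ram_gb(data_tb: float,
                          gb_ram_per_tb: float = GB_RAM_PER_TB_OF_DATA) -> float:
    """Estimate total RAM (in GB) needed across all Vespa nodes."""
    if data_tb < 0:
        raise ValueError("data_tb must be non-negative")
    return data_tb * gb_ram_per_tb


# Example: roughly 50 TB of indexed data.
total_ram_gb = estimate_vespa_ram_gb(50)
print(f"Estimated Vespa RAM: {total_ram_gb:.0f} GB")
```

Dividing the result by the RAM of your preferred node size gives a rough node count to budget for.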

Software

Onyx is built with an object-oriented approach, which makes extending its functionality very easy. If you fork a specific version of an open source project, keep an eye on upstream updates to crucial functionality. These are essential for security, stability and overall performance improvements.

- System Resources: While writing customizations, make sure you use system resources properly. For example, since you have a finite number of connections to the database, you might want to tune the connection pools in your connectors and use context managers to close a connection properly when it is no longer needed. Mistakes like these become very visible at scale and add up quickly. The same goes for loops, garbage collection and so on. On the other hand, you might want to make use of multiple cores and put a strong focus on concurrency (especially while serving requests), for example with async functions in FastAPI routers and endpoints. Consider threading to process iterable data instead of plain sequential loops. In connectors like Confluence, there is logic to continue indexing the remaining pages if indexing one page fails. This makes sure the overhead needed to start an indexing attempt is used as efficiently as possible: aborting the attempt every time a single page fails would mean re-attempting the whole indexing run next time, adding overhead and "wasting" resources.

- Optimize Indexing: In this blog post, I go over the complex theory of efficiently processing unstructured data for RAG. Since the quality of the indexed data directly determines the quality and accuracy of the answers, making sure indexing is done correctly, in an LLM-friendly format, is crucial. And while we are talking about indexing: if you make modifications to the relational database, make sure to add indices too! These are crucial for snappy queries and, as a result, a less congested database.

- Update dependencies: Some libraries, like `sentence-transformers`, regularly ship updates that improve performance. These are crucial for performance gains on the software side.

- Evaluate Software Alternatives: It is highly recommended to check out different approaches. Why should you only use the `PyTorch` backend while working with `sentence-transformers` and not `JAX`? What does OpenAI o1 do better than Claude 3.5 Sonnet? Staying on top of recent developments will keep your software stack state-of-the-art.

- LLMs: LLMs are a relatively straightforward part of RAG. They keep getting better, with higher rate limits and larger context windows. Evaluate your usage and make sure you are on the right plans with the different providers. Providers like Azure OpenAI let you provision resources much better with PTUs, which help optimize latency and costs.

- Get help: Do not be afraid to ask for help. Reach out in the Onyx and Vespa Slack workspaces if you need help; there are some fantastic engineers doing an insane amount of work answering questions and helping others out. My experience has been overwhelmingly positive and I can only recommend joining both communities.
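Two of the points under System Resources, processing items with threads and carrying on when a single page fails, can be sketched together. This is a minimal illustration using only the standard library and is not the code of Onyx's Confluence connector; `index_page` and the page IDs are hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def index_page(page_id: str) -> str:
    """Hypothetical stand-in for fetching and indexing a single page."""
    if page_id == "broken":
        raise ValueError(f"could not parse page {page_id}")
    return f"indexed:{page_id}"


def index_pages(page_ids: list[str], max_workers: int = 4):
    """Index pages concurrently; record failures instead of aborting the run."""
    indexed: list[str] = []
    failed: dict[str, str] = {}
    # The context manager shuts the worker threads down when the block exits.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(index_page, pid): pid for pid in page_ids}
        for future in as_completed(futures):
            page_id = futures[future]
            try:
                indexed.append(future.result())
            except Exception as exc:
                # One bad page must not waste the work done on the others.
                failed[page_id] = str(exc)
    return sorted(indexed), failed


indexed, failed = index_pages(["a", "broken", "b"])
print(indexed)
print(failed)
```

The failed pages can then be logged and retried on the next run instead of restarting the whole indexing attempt.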

Observability

Make sure to add monitoring, evaluations and observability to your application stack to keep an overview of things. Costs and downtimes can quickly get out of hand, which in turn will make your users unhappy. At the end of the day, if users do not feel like they are getting things done faster, none of this matters and we have failed our assignment.
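As a starting point, even a few lines of instrumentation give you latency numbers to watch. The sketch below is a minimal, standard-library-only illustration and not the custom tool mentioned in this post; `track_latency`, `mean_latency` and the operation name are made up, and a production setup would export such measurements to a proper monitoring system.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from statistics import mean

# Operation name -> list of measured durations in seconds.
latencies: dict[str, list[float]] = defaultdict(list)


@contextmanager
def track_latency(operation: str):
    """Measure how long the wrapped block takes, even if it raises."""
    start = time.perf_counter()
    try:
        yield
    finally:
        latencies[operation].append(time.perf_counter() - start)


def mean_latency(operation: str) -> float:
    """Mean duration in seconds over all recorded calls of `operation`."""
    return mean(latencies[operation])


# Example: wrap a (simulated) search request.
with track_latency("search"):
    time.sleep(0.01)

print(f"mean search latency: {mean_latency('search'):.3f}s")
```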

I have developed a custom tool to monitor and periodically evaluate answer quality (a little bit of write-up here), which I plan on open-sourcing soon. So keep an eye out and subscribe to my newsletter 😉

Summary

In conclusion, these lessons helped us serve over 1000 users every day with a mean search latency of 4.2 seconds over around 50 TB of data. On the chat side of things, the mean latency to stream a full answer was around 7.3 seconds with max_tokens set to 1024. Most of this time was spent streaming the actual answer rather than generating it. Accuracy measurements showed 90-100% correct search results, with wrong answers mostly stemming from duplicated data (files with the same name at two different locations with different content). Here, measures against hallucinations (such as prompt tuning, data de-duplication and tuning the weights of each source) helped very much.

Shipping it to production might be scary, especially at scale with confidential data, but it is also very rewarding. Every day I get thank-you messages from colleagues who tell me how much time they have been saving since we launched our application.

I would like to thank everyone involved in the aforementioned projects for the insane amount of work they have done to change the way we interact with data and make it available to everyone.

Thank you for taking the time to read through this blog. If you would like to get in touch with me, you can either use the contact form or email me directly at bijay@regmi.dev. If you would like to be informed of my new blog posts, feel free to subscribe to the newsletter below. Rest assured, it will not be used for anything else.

Scaling a RAG-Application to Production